Microsoft Research Debuts Phi-2, New Small Language Model

What is Phi-2?

Phi-2 is a 2.7 billion parameter small language model from Microsoft Research, intended for research and development of other language models, a type of artificial intelligence.

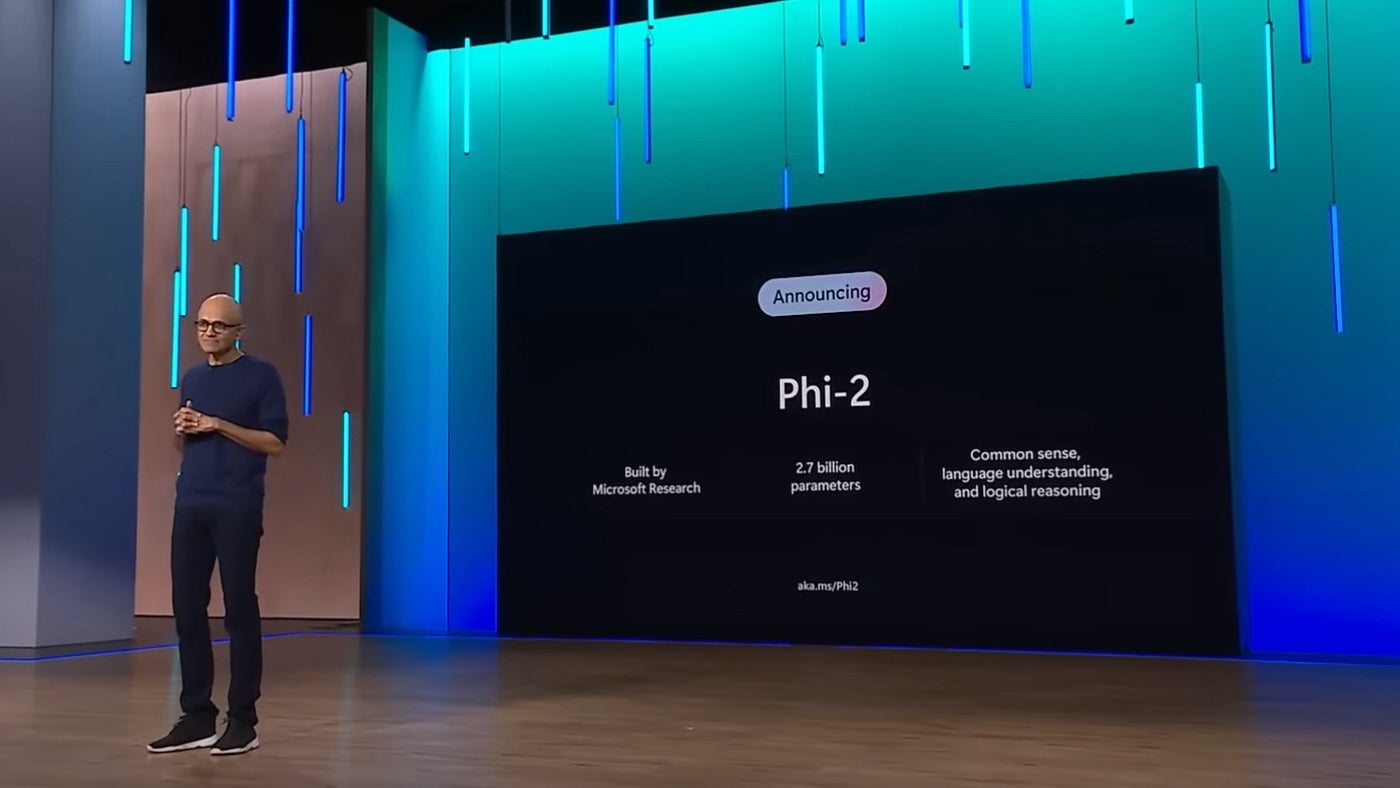

Phi-2 is the successor to Phi-1, a 1.3 billion parameter small language model that was released in June 2023. Phi-1 showed impressive performance on the HumanEval and MBPP benchmarks, which grade a model’s ability to code in Python. In September 2023, Microsoft Research released Phi-1.5, which added more common sense reasoning and language understanding to Phi-1. Satya Nadella announced Phi-2 during Microsoft Ignite in November 2023 (Figure A).

Figure A

“With its compact size, Phi-2 is an ideal playground for researchers, including for exploration around mechanistic interpretability, safety improvements or fine-tuning experimentation on a variety of tasks,” Microsoft Senior Researcher Mojan Javaheripi and Microsoft Partner Research Manager Sébastien Bubeck wrote in a blog post on Dec. 12.

“We are finding ways to make models cheaper, more efficient, and easier to train, and we feel it’s important to share what we’re learning so that the whole community benefits,” Bubeck told TechRepublic in an email. “… The size of Phi-2 makes it an ideal playground for their use, including for exploration around mechanistic interpretability, safety improvements, or fine-tuning experimentation on a variety of tasks.”

Phi-2 is outperforming larger language models

Microsoft Research says Phi-2 outperforms Mistral AI’s 7B (7 billion parameter) model and the 13 billion parameter version of Llama-2 on standard benchmarks such as Big Bench Hard (BBH), as well as on other language, math, multi-step reasoning and coding tests. Microsoft Research also tested Phi-2 against Google’s recently released Gemini Nano 2 and found that it performed better on the BBH, BoolQ, MBPP and MMLU tests.

How to make a smaller language model work like a large one

Microsoft Research found that smaller models can perform as well as larger ones if certain choices are made during training. One such choice is using “textbook-quality data.”

“Our training data mixture contains synthetic datasets specifically created to teach the model common sense reasoning and general knowledge, including science, daily activities and theory of mind, among others,” Javaheripi and Bubeck wrote. “We further augment our training corpus with carefully selected web data that is filtered based on educational value and content quality.”
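To make the filtering idea concrete, here is a minimal sketch in Python. It is an illustration only, not Microsoft Research's pipeline: the `WebDocument` class, the two score fields, the 0.7 thresholds and the sample documents are all hypothetical, and the sketch assumes some upstream classifier has already scored each document.

```python
from dataclasses import dataclass


@dataclass
class WebDocument:
    text: str
    educational_value: float  # hypothetical score in [0, 1]
    content_quality: float    # hypothetical score in [0, 1]


def filter_web_data(docs, min_educational=0.7, min_quality=0.7):
    """Keep only documents that clear both (assumed) score thresholds."""
    return [
        d for d in docs
        if d.educational_value >= min_educational
        and d.content_quality >= min_quality
    ]


# Made-up sample data, for illustration only.
docs = [
    WebDocument("Step-by-step explanation of photosynthesis", 0.9, 0.8),
    WebDocument("Clickbait listicle", 0.2, 0.4),
    WebDocument("Well-written forum rant", 0.3, 0.9),
]

kept = filter_web_data(docs)
print([d.text for d in kept])
```

Requiring both scores to pass means a polished but uninformative page is dropped just like a sloppy one, which is one plausible reading of filtering on "educational value and content quality."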

Another way to make a smaller language model perform as well as a large one is to scale up from an existing, smaller model. For example, the research team embedded the knowledge of the 1.3 billion parameter Phi-1.5 model into the 2.7 billion parameter Phi-2 model.

“This scaled knowledge transfer not only accelerates training convergence but shows clear boost in Phi-2 benchmark scores,” wrote Javaheripi and Bubeck.
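The quoted passage does not spell out how the transfer was done. As a toy illustration only, one generic way to warm-start a larger model from a smaller one is to copy the small model's weights into a corner of the larger weight matrices and fill the rest with small random values. The `grow_weights` function, the noise scale and the tiny matrices below are assumptions made for this sketch, not the procedure used for Phi-2.

```python
import random


def grow_weights(small, new_rows, new_cols, noise=0.01, seed=0):
    """Return a new_rows x new_cols matrix whose top-left block is `small`.

    Entries outside that block get small random values, so the larger
    layer starts from the smaller layer's weights rather than from scratch.
    """
    rng = random.Random(seed)
    rows, cols = len(small), len(small[0])
    if new_rows < rows or new_cols < cols:
        raise ValueError("target shape must be at least as large as the source")
    return [
        [
            small[r][c] if r < rows and c < cols else rng.uniform(-noise, noise)
            for c in range(new_cols)
        ]
        for r in range(new_rows)
    ]


# A 2x2 "small model" layer grown into a 3x4 "large model" layer.
small_layer = [[0.5, -0.2], [0.1, 0.3]]
large_layer = grow_weights(small_layer, 3, 4)

for row in large_layer:
    print(row)
```

Starting from learned weights instead of a fully random initialization is the intuition behind faster training convergence; real systems would apply the same idea to every layer of the network.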

Culled from TechRepublic